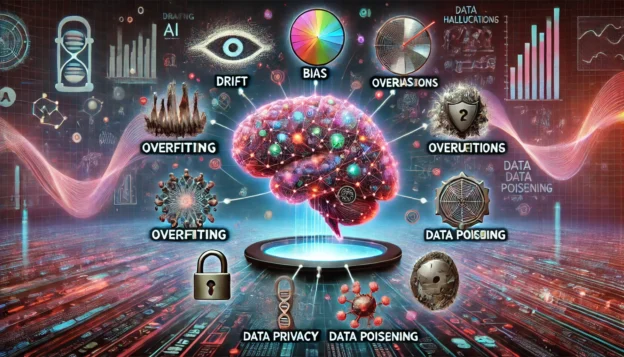

This article explores cognitive dissonance in AI, focusing on inconsistencies in AI system outputs caused by conflicting data, rules, or patterns. It examines key issues such as drift, bias, overfitting, underfitting, explainability, transparency, data privacy, security, hallucinations, and data poisoning. It also outlines strategies for addressing these challenges: continuous monitoring, bias mitigation, balancing model complexity, enhancing explainability, robust data governance, validating outputs to limit hallucinations, and protecting against data poisoning. The goal is to develop more reliable, fair, and trustworthy AI systems.

Introduction

Artificial Intelligence (AI) has transformed many aspects of our lives, from personalized recommendations on streaming services to advanced medical diagnoses. However, like any technology, AI is not without its challenges. Among these challenges, cognitive dissonance in AI is a concept worth exploring. This article aims to break down what cognitive dissonance means in the context of AI and explain related issues such as drift, bias, and other pertinent phenomena.

What is Cognitive Dissonance in AI?

Cognitive dissonance, a term traditionally used in psychology, refers to the mental discomfort experienced by a person who holds contradictory beliefs or values. In AI, cognitive dissonance can be understood as inconsistencies in the system’s outputs due to conflicting data, rules, or learned patterns. This dissonance can lead to unpredictable or undesirable behavior in AI systems.

Key Issues in AI

Drift

- Definition: Drift in AI refers to changes over time in the statistical properties of the input data (data drift) or in the relationship between the inputs and the target (concept drift). The change can be gradual or abrupt, and it can stem from shifts in user behavior, market trends, or environmental conditions.

- Example: Imagine an AI model trained to predict stock prices based on historical data. If the market undergoes significant changes due to new regulations or global events, the model’s predictions might become less accurate because it was trained on data that no longer reflects the current market conditions.

Bias

- Definition: Bias in AI occurs when an algorithm produces systematically prejudiced results because of erroneous assumptions in the machine learning process or unrepresentative training data.

- Example: If an AI hiring tool is trained predominantly on resumes from male candidates, it might develop a bias against female candidates, unfairly favoring male applicants in its recommendations.

Overfitting and Underfitting

- Overfitting: This happens when an AI model learns the training data too well, including its noise and outliers, resulting in poor performance on new, unseen data. The model is too complex and fits the quirks of the training data rather than the underlying pattern.

- Underfitting: Conversely, underfitting occurs when an AI model is too simple to capture the underlying patterns in the data, leading to poor performance both on the training data and new data.
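Both failure modes show up when training error is compared with error on held-out data. The following is a minimal sketch, assuming a synthetic noisy sine curve and a k-nearest-neighbours regressor chosen only for illustration (neither comes from a real system). With `k=1` the model memorizes the training set, and with `k` equal to the training set size it predicts a single average for everything.

```python
import math
import random
import statistics

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def mse(train, points, k):
    """Mean squared error of the k-NN model on the given points."""
    return statistics.fmean((knn_predict(train, x, k) - y) ** 2 for x, y in points)

def sample(rng, n):
    """Synthetic data (an assumption for this sketch): sin(x) plus Gaussian noise."""
    xs = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.3)) for x in xs]

rng = random.Random(0)
train, val = sample(rng, 200), sample(rng, 200)

# k=1: very flexible, k=15: moderate, k=200: predicts the global mean everywhere.
results = {k: (mse(train, train, k), mse(train, val, k)) for k in (1, 15, 200)}
for k, (train_err, val_err) in results.items():
    print(f"k={k:>3}  train MSE={train_err:.3f}  validation MSE={val_err:.3f}")
```

A training error near zero paired with a clearly higher validation error points to overfitting. High error on both sets points to underfitting.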

Explainability and Transparency

- Definition: Explainability refers to the ability to understand and interpret how an AI model makes decisions. Transparency involves making the AI’s decision-making process clear and accessible to users and stakeholders.

- Example: In healthcare, an AI system might predict a patient’s risk of developing a certain disease. If the healthcare professionals cannot understand how the AI arrived at its conclusion, they might be reluctant to trust or act on its recommendations.

Data Privacy and Security

- Definition: Data privacy and security mean protecting the data used by AI systems from unauthorized access and breaches while maintaining users’ privacy.

- Example: AI systems often require large amounts of personal data. If this data is not adequately protected, it could be exposed to hackers, leading to potential misuse and privacy violations.

Hallucinations

- Definition: Hallucinations in AI refer to instances where the model generates information or responses that are factually incorrect or nonsensical, despite appearing plausible.

- Example: An AI chatbot might provide a detailed answer about a non-existent historical event or make up scientific facts, leading to misinformation.

Data Poisoning

- Definition: Data poisoning involves intentionally corrupting the training data of an AI model to manipulate its outcomes or degrade its performance.

- Example: Malicious actors might introduce biased data into an AI system used for content moderation, causing it to incorrectly flag or ignore certain types of content.

Addressing These Issues

Continuous Monitoring and Updating

Continuous monitoring of input distributions and prediction quality shows when a model has gone stale. Regularly retraining the model on recent data can then mitigate drift and keep it relevant and accurate over time.
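One way to monitor a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution seen at training time with the distribution seen in production. The sketch below is illustrative only: the function name, the bin count, and the synthetic Gaussian samples are assumptions, and the cut-offs in the comments (about 0.1 and 0.25) are widely quoted rules of thumb rather than fixed standards.

```python
import math
import random

def population_stability_index(reference, current, n_bins=10):
    """PSI between a reference sample and a current sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]   # data the model was trained on
same_dist = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # production data, no drift
shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]    # production data, mean has moved

psi_stable = population_stability_index(training, same_dist)
psi_drift = population_stability_index(training, shifted)

# Rule of thumb: below ~0.1 is usually read as stable, above ~0.25 as significant drift.
print(f"no drift: {psi_stable:.3f}   shifted: {psi_drift:.3f}")
```

In practice a check like this would run on a schedule for each important feature, with a high PSI prompting investigation or retraining.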

Bias Mitigation Techniques

Implementing strategies such as diverse training data, fairness constraints, and algorithmic audits can help reduce bias in AI systems.
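An algorithmic audit often starts with a simple question: how often does each group receive a favorable decision? The sketch below computes per-group selection rates and their ratio for a hypothetical log of hiring recommendations. The data, group labels, and function names are invented for illustration, and the 0.8 cut-off mentioned in the comments is the often-cited "four-fifths" rule of thumb, not a universal legal or statistical test.

```python
from collections import defaultdict

def selection_rates(records):
    """Fraction of positive decisions per group, from (group, decision) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        selected[group] += int(decision)
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a positive decision")
    return min(rates.values()) / highest

# Hypothetical audit log: (group, was_recommended)
decisions = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# A ratio below about 0.8 is commonly treated as a signal worth investigating.
print(rates, f"ratio={ratio:.2f}")
```

A low ratio does not prove unfairness on its own, but it tells the auditors where to look more closely.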

Balancing Model Complexity

Using techniques like cross-validation, regularization, and pruning can help find the right balance between overfitting and underfitting.
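As a rough illustration of cross-validation, the sketch below scores three levels of model complexity with 5-fold cross-validation and keeps the one with the lowest average validation error. The model is a deliberately simple piecewise-constant regressor in which the number of bins acts as the complexity knob, loosely analogous to the depth of a pruned decision tree. The model, the synthetic data, and all names are assumptions made for this example.

```python
import math
import random
import statistics

def fit_binned_means(xs, ys, n_bins, lo, hi):
    """Piecewise-constant regressor: the mean of y inside each equal-width bin."""
    width = (hi - lo) / n_bins

    def bin_of(x):
        return min(max(int((x - lo) / width), 0), n_bins - 1)

    sums, counts = [0.0] * n_bins, [0] * n_bins
    for x, y in zip(xs, ys):
        sums[bin_of(x)] += y
        counts[bin_of(x)] += 1
    fallback = statistics.fmean(ys)  # used for bins that received no training points
    means = [s / c if c else fallback for s, c in zip(sums, counts)]
    return lambda x: means[bin_of(x)]

def k_fold_mse(xs, ys, n_bins, k=5, lo=0.0, hi=2 * math.pi):
    """Average validation MSE over k folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        val_idx = set(range(fold, n, k))  # every k-th point belongs to this fold
        train_x = [xs[i] for i in range(n) if i not in val_idx]
        train_y = [ys[i] for i in range(n) if i not in val_idx]
        model = fit_binned_means(train_x, train_y, n_bins, lo, hi)
        fold_errors.append(statistics.fmean((model(xs[i]) - ys[i]) ** 2 for i in val_idx))
    return statistics.fmean(fold_errors)

rng = random.Random(1)
xs = [rng.uniform(0.0, 2 * math.pi) for _ in range(300)]
ys = [math.sin(x) + rng.gauss(0.0, 0.3) for x in xs]

candidates = [1, 8, 150]  # too simple, moderate, too complex
cv_scores = {b: k_fold_mse(xs, ys, b) for b in candidates}
best_bins = min(cv_scores, key=cv_scores.get)
print(cv_scores, "best:", best_bins)
```

The same loop works for any tunable setting, such as a regularization strength or a tree depth, as long as each candidate is scored only on data it was not trained on.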

Enhancing Explainability

Model-agnostic explanation methods such as LIME and SHAP, together with simpler, more interpretable models where possible, can improve the transparency and explainability of AI systems.
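To show what "model-agnostic" means in practice, the sketch below uses permutation importance. This is a different and simpler technique than LIME or SHAP, swapped in here because it needs no external library. It treats the model as a black box, shuffles one feature at a time, and measures how much the error grows. The `black_box` function and the synthetic data are hypothetical stand-ins for a real trained model and dataset.

```python
import random
import statistics

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in mean squared error when one feature column is shuffled."""
    rng = random.Random(seed)

    def mse(rows):
        return statistics.fmean((predict(r) - t) ** 2 for r, t in zip(rows, y))

    baseline = mse(X)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(shuffled) - baseline)
        importances.append(statistics.fmean(increases))
    return importances

def black_box(row):
    """Hypothetical model: leans on feature 0, slightly on feature 1, ignores feature 2."""
    return 3.0 * row[0] + 0.5 * row[1]

rng = random.Random(42)
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
y = [black_box(row) + rng.gauss(0, 0.1) for row in X]

scores = permutation_importance(black_box, X, y)
for j, score in enumerate(scores):
    print(f"feature {j}: error increase {score:.3f}")
```

Because the procedure only calls `predict`, it applies equally to a linear model, a tree ensemble, or a neural network.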

Robust Data Governance

Adopting strong data governance practices, including encryption, anonymization, and stringent access controls, can protect user data and maintain privacy.
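A small part of such a policy can be sketched in code: drop fields the model does not need and replace direct identifiers with a keyed hash before the data reaches a training pipeline. Note that keyed hashing is pseudonymization, which is weaker than full anonymization because whoever holds the key can still link records. The field names, the record, and the key below are placeholders invented for this example.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC), hex-encoded."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record: dict, id_fields: set, drop_fields: set, secret_key: bytes) -> dict:
    """Drop unneeded fields and pseudonymize identifier fields; keep the rest as-is."""
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned

# Placeholder key: a real key would be loaded from a secret store, never hard-coded.
key = b"example-key-load-from-a-secret-store"

record = {"email": "jane@example.com", "full_name": "Jane Doe", "age": 41, "diagnosis_code": "E11"}
safe = scrub_record(record, id_fields={"email"}, drop_fields={"full_name"}, secret_key=key)
print(safe)
```

The same input always maps to the same token, so records can still be joined across tables without exposing the original identifier.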

Preventing Hallucinations

Validating AI outputs before they are used, for example by cross-referencing them with reliable sources, can catch many hallucinations and reduce the misinformation that reaches users.
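The cross-referencing step can be sketched as a lookup against a trusted store. The example assumes that claims have already been extracted from the model's answer as (subject, attribute, value) triples, which is a hard problem in itself and is not shown. The fact table, the claim format, and the "Treaty of Zorn" entry (a deliberately fictional event) are all illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    claim: str
    status: str  # "supported", "contradicted", or "unverified"

def cross_reference(claims, trusted_facts):
    """Compare (subject, attribute, value) claims against a trusted lookup table."""
    verdicts = []
    for subject, attribute, value in claims:
        known = trusted_facts.get((subject, attribute))
        if known is None:
            status = "unverified"      # nothing in the trusted store to check against
        elif known == value:
            status = "supported"
        else:
            status = "contradicted"
        verdicts.append(Verdict(f"{subject} {attribute} = {value}", status))
    return verdicts

# Hypothetical trusted store, e.g. built from a curated knowledge base.
trusted_facts = {
    ("Apollo 11", "landing_year"): 1969,
    ("water", "boiling_point_celsius_at_1_atm"): 100,
}

# Claims assumed to have been extracted from a chatbot answer.
claims = [
    ("Apollo 11", "landing_year", 1969),
    ("water", "boiling_point_celsius_at_1_atm", 90),
    ("Treaty of Zorn", "signing_year", 1712),  # fictional event, absent from the store
]

verdicts = cross_reference(claims, trusted_facts)
for v in verdicts:
    print(f"{v.status:>12}: {v.claim}")
```

Contradicted claims can be blocked or corrected, while unverified ones can be routed to a human reviewer or shown with a warning.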

Protecting Against Data Poisoning

Using robust data validation techniques, employing anomaly detection systems, and conducting regular audits of the training data can help protect against data poisoning.
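A basic form of anomaly detection for training data is to flag values that sit far from the bulk of a feature's distribution. The sketch below uses the modified z-score, which is based on the median and the median absolute deviation (MAD) so that the outliers themselves do not distort the estimate. The 3.5 threshold is a commonly used default, and the sample values are invented. A check like this catches crude tampering but is unlikely to catch carefully crafted poisoning that stays within normal ranges.

```python
import statistics

def flag_outliers(values, threshold=3.5):
    """Indices whose modified z-score (median and MAD based) exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread to measure against; needs a different check
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]

# Hypothetical feature column from a newly submitted training batch.
feature_values = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2, 9.7, 55.0, 10.1, -40.0]

suspicious = flag_outliers(feature_values)
print("rows to review before training:", suspicious)
```

Flagged rows are best quarantined for review rather than silently deleted, since legitimate rare cases can look like outliers too.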

Conclusion

Cognitive dissonance in AI, reflected in issues such as drift, bias, overfitting, lack of transparency, data privacy concerns, hallucinations, and data poisoning, poses significant challenges. By understanding and addressing these issues, we can develop more reliable, fair, and trustworthy AI systems. As AI continues to evolve, ongoing vigilance and proactive management of these challenges will be crucial in harnessing its full potential for societal benefit.