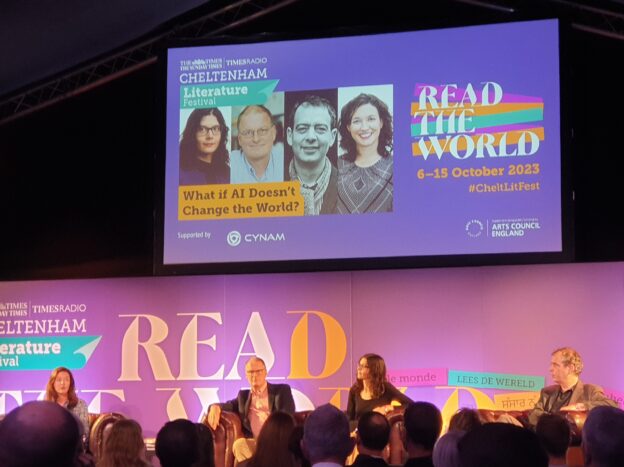

As part of the Cheltenham Literature Festival 2023, in honour of Ada Lovelace Day and in response to a new report from the University of Bristol’s Research Institute for Sociotechnical Cyber Security (RISCS), The Times hosted a discussion panel, “What If AI Doesn’t Change the World?”. Led by The Times technology business editor Katie Prescott, the panel brought together Cambridge Professor of Politics David Runciman, Oxford AI systems expert Michael Wooldridge and AI ethicist Kanta Dihal to explore the promise and peril of AI and to ask whether our fears for the future are in fact misplaced. The audience was invited to ask the panel questions but, inevitably, there wasn’t time to answer them all. As well as documenting the event, I thought it would be fun to ask ChatGPT what they thought…

Precursor: The RISCS ChelTechne Summit 2023 Report

The recent ChelTechne summit brought together experts to discuss AI narratives and produced the RISCS ChelTechne Summit 2023 Report, which highlights the need to improve how AI is discussed. Its key findings stress the impact of media narratives on public understanding of AI. They suggest that scientists and storytellers collaborate to produce more accurate narratives. The report also underscores the importance of diverse narratives, regulatory frameworks, AI literacy, and addressing biases within AI discussions. Its aim is to foster more productive debate and to improve public understanding of AI.

“What If AI Doesn’t Change the World?” Panel Discussion

The panel was led by Katie Prescott, Technology Business Editor at The Times, and included Cambridge Professor of Politics David Runciman, Oxford AI systems expert Michael Wooldridge and AI ethicist Kanta Dihal, who were challenged to explore AI. The panellists were excellent and did a great job of presenting a complex subject to a Literature Festival audience. All three have recently released books on AI, which tied in nicely with the Literature Festival. It was also great to hear that, thanks to the Literature Festival entrance fees, over 12,000 schoolchildren were able to attend and enjoy learning about new literature and the current literary landscape.

Key Takeaway

My key takeaway was that people are generally not very clued up about AI. As a result, they are kind of scared and worry unreservedly about the repercussions of unfettered AI. The obvious response is for people to self-educate, to learn more, and to engage with the narrative directly, principally by using AI themselves.

Panel Highlights

Highlights of the panel discussion include:

- Professor David Runciman talked about using ChatGPT to turn the first two chapters of his new book into more digestible content.

- Michael Wooldridge told the audience that ChatGPT creates better “copy” than your typical “Coke-addled Advertising Exec” (snigger).

- Kanta Dihal, who impressed me most. Her understanding of the topic was especially insightful and nuanced when it came to the actual uses of AI versus the popular narrative, and to the genuine ethical dilemmas that arise. For example, an AI won’t do anything intrinsically “evil”, “wicked”, or “wrong” by itself. Unrestrained, however, it would certainly teach a person skills that could be put to horrifying use, such as building a pipe bomb.

Panel Downsides

The downsides to the panel discussion and live “Q and A” would be:

- System versus Machine: The over-conflation of the word “machine” with the word “system”. Used once or twice, the substitution nicely underscored the narrative, but trying to replace one with the other outright detracted from the more valid points being presented.

- AI for Personal Use: The advice not to use AI for “personal” purposes, which I felt didn’t apply well to all use cases. For instance, using AI to detect tone and emotion in writing is surprisingly useful to people on the autism spectrum. It helps them understand what someone is feeling and, therefore, what the actual message is.

- Too much Terminator: The repetitive use of the Terminator as a point of reference for “AI gone bad” and “rogue AI” (and, by implication, Skynet, the AI in the film, which wasn’t mentioned by name). I know it’s because it’s such a well-known film, brand, franchise, story, et cetera, but it wasn’t even that good.

- Not enough Anything Else: I was really hoping someone would mention the AI “Allied Mastercomputer” (AM) in Harlan Ellison’s “I Have No Mouth, and I Must Scream”. Note that Ellison’s work is acknowledged in the credits of The Terminator, after he claimed the story was partially plagiarised from his writing. Another candidate was the AI “Proteus IV” from Dean Koontz’s “Demon Seed”, which was followed by a film four years later, in 1977, starring Julie Christie. Both of these are far more terrifyingly “dark” depictions of AI than Arnie.

- Positive Examples in Fiction: Some positive examples of fictional AI would have been nice too. One option is Zen, the AI controlling the Liberator in the BBC TV show “Blake’s 7”. Even better would be Orac from the same show, although it’s hard to argue that Orac is “positive”.

ChatGPT Responds: The Questions (from Humans) and Answers (from AI)

The audience was invited to ask the panel questions but, inevitably, there wasn’t time to answer them all. I thought it would be fun to ask ChatGPT what they thought… so here are the questions from the audience, answered by ChatGPT…

- How do we manage the instinct for humans to anthropomorphize AI? Are Chat Bots the most irresponsible medium for AI use, e.g., the recent treason trial?

  - Managing anthropomorphism involves educating the public about AI’s limitations and capabilities. Chatbots can be used responsibly if their limitations are clear, but in sensitive contexts like legal trials, caution is necessary.

- Can the world work together on AI? Won’t the likes of China and Russia be intent on using it for repressive purposes?

  - International cooperation on AI is vital for ethical development. While some nations may misuse AI, global agreements and ethical standards can help mitigate misuse.

- When it’s as easy for a 13-year-old in Estonia to access AI capabilities as it is for a nation state, how do we encourage ethical use?

  - Encouraging ethical AI use among individuals requires education, regulation, and responsible AI design to prevent misuse.

- Would AI ever be able to “develop a conscience,” or will it relentlessly pursue the tasks set to it by its controllers, irrespective of moral right or wrong?

  - AI lacks consciousness; it follows its programming. Ethical behavior in AI depends on how it’s designed and the values instilled by its creators.

- How big is the current skills gap in the workforce to ensure AI is used effectively?

  - There’s a significant skills gap in AI, with a demand for AI experts. Closing this gap is crucial for responsible AI implementation.

- Are there any concerns around accidental abuse of AI, i.e., starting with good intentions but losing control of AI? Could this have happened already?

  - Yes, there are concerns about AI safety and unintended consequences. Instances of AI systems making biased or harmful decisions have already occurred, highlighting the need for better safeguards.

- Since AI is owned by corporations, it’s not democratized but instead in the hands/control of companies who are only incentivized to make a profit?

  - The corporate control of AI raises concerns about transparency and fairness. Striking a balance between innovation and public interest is a challenge that needs addressing.

- Can the panelists answer the question “what if AI doesn’t change the world”?

  - If AI doesn’t change the world, it will still have significant impacts, but they may be less transformative than anticipated. AI’s potential benefits and risks require careful consideration.

- Currently, AI is extremely biased. Do you see any opportunities for AI to help with better inclusion and equality for minority individuals?

  - AI can be a tool for improving inclusion and reducing bias when designed and used with diversity and fairness in mind.

- Do you anticipate changes to copyright laws due to models such as GPT-4, DALL·E, etc.?

  - AI-generated content poses copyright challenges, and legal frameworks may need to adapt to address these new issues.

Summarised Themes

Several themes emerge from the questions and answers; I’ve summarised them here.

- Ethics and Responsibility of AI

  - Many questions revolve around the ethical use of AI, including concerns about bias, control, and accountability.

  - Answers emphasize the importance of educating users, developing ethical AI design, and considering the unintended consequences of AI technology.

- Global Cooperation and Competition

  - Questions raise concerns about global cooperation in AI development, with a focus on the potential for misuse by nations like China and Russia.

  - The answers highlight the need for international collaboration, standards, and agreements to ensure responsible AI use worldwide.

- Access and Democratization

  - Some questions address the accessibility of AI, both for individuals and nation-states, and the implications for ethical use.

  - Responses emphasize the importance of regulating access, promoting responsible use, and addressing the skills gap to democratize AI’s benefits.

- AI Consciousness and Behavior

  - Questions inquire about the possibility of AI developing a conscience or ethical behaviour.

  - Answers clarify that AI lacks consciousness and that its behaviour depends on its programming and the values instilled by its creators.

- Corporate Control and Profit Motives

  - Concerns are raised about AI’s ownership by corporations and the potential conflict between profit motives and public interest.

  - Responses acknowledge the need to balance innovation and ethical considerations in AI development.

- AI’s Impact on Society

  - Questions ponder AI’s transformative potential and its impact on society, including concerns about biased AI and the changing nature of human intelligence.

  - Answers suggest that AI will have significant impacts, whether transformative or not, and that addressing biases and educating society are essential.

- AI Safety and Control

  - Concerns about AI safety, accidental abuse, and loss of control are evident in the questions.

  - Responses emphasize the importance of AI safety measures, responsible development, and proactive risk mitigation.

- Legal and Regulatory Challenges

  - Questions touch on legal challenges posed by AI, such as copyright issues related to AI-generated content.

  - Answers suggest that legal frameworks may need adjustments to address emerging AI-related challenges.

- Education and Awareness

  - Throughout the questions and answers, the theme of education and awareness consistently arises as a solution to many AI-related challenges.

  - Educating individuals, organizations, and policymakers is seen as crucial for responsible AI use.

- Future Uncertainty

  - The uncertainty of AI’s future impact on society and the difficulty in imagining non-dystopian scenarios are recurrent themes.

  - Answers stress the importance of responsible development, ethical considerations, and vigilance in shaping AI’s future.

Conclusion

It was an interesting event and an engaging panel, led by Katie Prescott, which highlighted the concerns the general public has about AI. These themes underscore the multifaceted nature of AI discussions. They point to the need for greater education, interdisciplinary approaches, collaboration, and ongoing dialogue to address the complex ethical, societal, and technological issues surrounding AI.