Twitter has recently made the source code for its recommendation algorithm public, revealing the elements it considers when curating your personalized “For You” timeline.

Here are the top 10 insights we’ve gained about the Twitter algorithm, along with the strategies you can use to improve your chances of going viral:

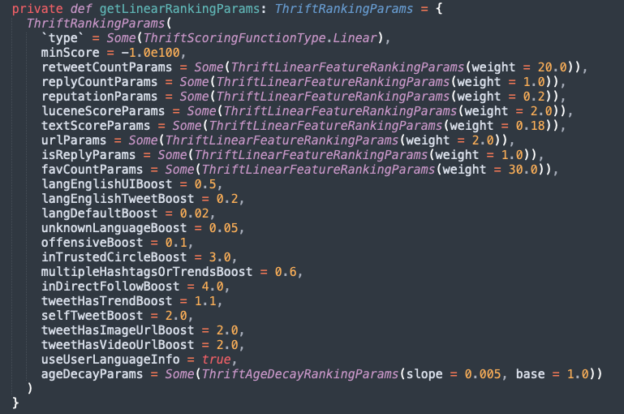

- Prioritize Likes: Likes contribute more to your tweet’s reach than retweets or replies. A like carries a 30x boost, compared with 20x for a retweet and 1x for a reply, so aim to create content that encourages your followers to hit the ‘like’ button.

- Maintain a Positive Reputation: Twitter’s algorithm assigns a Reputation Score to your account. Ensure your content isn’t offensive or harmful, as any negative user actions like blocking, reporting, muting, or unfollowing can lead to a downranking of your content.

- Minimize Hashtag Use: Excessive use of hashtags can lead to your content being downranked. While hashtags originated on Twitter, it’s recommended to use a maximum of one hashtag per tweet, or none at all, if possible.

- Engage with Trending Topics: Posting about current trends can give your content a boost. Be aware of what’s trending and find creative ways to incorporate these topics into your tweets.

- Use Visual Media: According to the algorithm, tweets with images or videos get twice the visibility of text-only tweets.

- Limit External Links: External links in tweets could be flagged as spam, leading to downranking. Unless your content has high engagement, it’s best to limit the use of external links.

- Maintain a Healthy Follower-to-Following Ratio: Having a disproportionately low number of followers compared to the number of accounts you follow can lead to a downranking of your account. Unfollow accounts that aren’t relevant to you to maintain a balanced ratio.

- Check Your Spelling: Tweets with unrecognized or misspelled words can be ranked significantly lower. Make sure to proofread your tweets before posting.

- Stick to Your Niche: The algorithm categorizes accounts based on their content. Posting about topics outside of your typical subject matter can lead to downranking.

- Consider Twitter Blue: Twitter Blue subscribers receive a boost of 2-4x on their tweets depending on whether the viewer is in the same network/niche.
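
To get a feel for how the multipliers in the list compare, here is a toy calculation in Python. It only uses the numbers quoted above (30x for likes, 20x for retweets, 1x for replies, 2x for media). The names `Tweet` and `toy_score` are made up for illustration, and combining the numbers as a weighted sum is an assumption, not how Twitter's ranking code is actually structured.

```python
from dataclasses import dataclass

# Per-action multipliers quoted in the list above.
ENGAGEMENT_WEIGHTS = {"likes": 30.0, "retweets": 20.0, "replies": 1.0}
MEDIA_BOOST = 2.0  # images or video, per the list above


@dataclass
class Tweet:
    """Hypothetical container for a tweet's engagement counts."""
    likes: int = 0
    retweets: int = 0
    replies: int = 0
    has_media: bool = False


def toy_score(tweet: Tweet) -> float:
    """Illustrative score: weighted engagement sum, doubled for media.

    The weighted-sum form is an assumption made for this sketch.
    """
    base = (
        ENGAGEMENT_WEIGHTS["likes"] * tweet.likes
        + ENGAGEMENT_WEIGHTS["retweets"] * tweet.retweets
        + ENGAGEMENT_WEIGHTS["replies"] * tweet.replies
    )
    return base * (MEDIA_BOOST if tweet.has_media else 1.0)


text_only = Tweet(likes=10, retweets=5, replies=20)
with_image = Tweet(likes=10, retweets=5, replies=20, has_media=True)
print(toy_score(text_only))   # 420.0
print(toy_score(with_image))  # 840.0
```

In this sketch, 10 likes contribute 300 points while 20 replies contribute only 20, which is why the list puts likes first.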

Thanks to this article for the summary: https://genflow.com/blogs/how-the-twitter-algorithm-works-in-2023