In today’s competitive business landscape, identifying the right customers can mean the difference between sustainable growth and wasted resources. This is where an Ideal Customer Profile (ICP) comes into play. An ICP lets a business target the organizations that best fit its products or services, improving sales efficiency and overall customer satisfaction.

Waste, Luxury, and the Human Condition: A Reflection on Peacocks, Ferraris, and the Nature of Excess

This article explores the parallels between the extravagant plumage of peacocks and the luxury of Ferraris, questioning the purpose and value of waste and excess. While a peacock’s feathers serve a biological function by signaling genetic fitness, a Ferrari in central London is a symbol of conspicuous consumption, displaying wealth rather than evolutionary advantage. The article delves into the complexities of waste in human society, examining how luxury can signal success but also reflect social inequality and environmental degradation. Ultimately, it argues that waste must be balanced with meaningful values to avoid becoming destructive.

ICP vs. Persona: What’s the Difference?

In sales and marketing, two concepts often come up when discussing customer targeting: the Ideal Customer Profile (ICP) and the Buyer Persona. While these terms are sometimes used interchangeably, they serve distinct purposes and focus on different aspects of your target audience. Understanding the difference between the two is crucial for aligning your marketing and sales efforts effectively.

What is an ICP in Sales and Why is It Essential?

In the world of sales, focusing on the right prospects is key to success. One of the most important tools that sales teams use to achieve this is the Ideal Customer Profile, or ICP. But what exactly is an ICP, and why does it matter so much in today’s competitive marketplace?

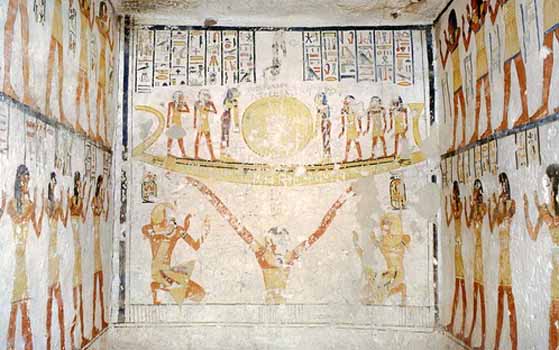

The Ogdoad and Their Influence on Later Egyptian Gods

The Ogdoad, an ancient group of eight primordial deities, played a foundational role in Egyptian cosmogony, symbolizing chaos and pre-creation forces. Worshipped in Hermopolis, they represent key elements like water, infinity, darkness, and hiddenness. These forces later influenced the emergence of more defined gods, such as Ra and Amun, who organized chaos into divine order. The Ogdoad’s themes of chaos, rebirth, and transformation shaped Egyptian religious thought, influencing creation myths and the balance between order and chaos, light and dark, and life and death.

Mastering Product-Market Fit: Insights from Rob Snyder’s Startup Playbook

Achieving product-market fit is one of the hardest challenges for startups. Rob Snyder’s session at “Cyber Runway: Scale” provides a practical guide to navigating the “pain cave” and building repeatable success by focusing on customer demand, case studies, and quick iterations.

Expanding into the US: Lessons Learned and Pitfalls to Avoid from Paul Barnes

Expanding internationally can be a game-changer for startups, but the process comes with unique challenges. Paul Barnes of Overe shared his experience on navigating the “Delaware Flip,” managing US tax complexities, and building the right legal and financial foundation to set a company up for success in the US.

Scaling in a Competitive Market: Lessons from Al Paterson’s Startup Journey

In this candid fireside chat, Al Paterson shares valuable insights on raising capital, expanding into new markets, and managing a startup in today’s competitive landscape. Learn why timing, strategic fundraising, and choosing the right location for your team are key to scaling successfully.

Building Cybersecurity for SMEs: Lessons from Cyber Smart’s Jamie Akhtar

In this fireside chat, Jamie Akhtar shared the journey of building Cyber Smart, his lessons in scaling, fundraising, and pivoting the business to focus on cyber hygiene for SMEs. Learn how he navigated the ups and downs of the startup world.

The Life and Times of Brion Gysin: Multi-Dimensional Artist from Teenage Surrealist to Multimedia Wunderkind

Brion Gysin, an artist, writer, and key figure in the 20th-century avant-garde, straddled multiple artistic movements, leaving a profound influence on literature, art, and music. This article explores Gysin’s early fallout with the Surrealists, his pivotal collaboration with the Beat Generation, and his role in introducing the Master Musicians of Joujouka to the West. It highlights his invention of the Dreamachine, his development of the cut-up technique with William S. Burroughs, and his influence on musicians like Brian Jones and David Bowie. Gysin’s legacy of experimentation, mysticism, and boundary-pushing creativity endures, despite his battles with cancer in his later years.

Waste, Luxury, and the Human Condition: Intersectionality of Violet Paget’s Satan the Waster, Siegfried Sassoon’s At the Cenotaph, and Rory Sutherland’s views on Ferraris in London

While browsing YouTube Shorts, mainly for Tacticus Tips and Warhammer 40K fan fiction, I stumbled upon a video featuring Rory Sutherland discussing the absurdity of Ferraris in central London. His thoughts on waste reminded me of a book I encountered in the school library at KEGS Aston around 1983: Vernon Lee’s Satan the Waster. This article discusses the nature of waste, drawing a comparison with Siegfried Sassoon’s At the Cenotaph. Through this lens, it explores how both Sassoon and Violet Paget (writing as Vernon Lee) critique the senselessness of war, using waste as a symbol for the destruction of human life, resources, and potential, much like how ridiculous luxury goods are symbols of impractical extravagance.

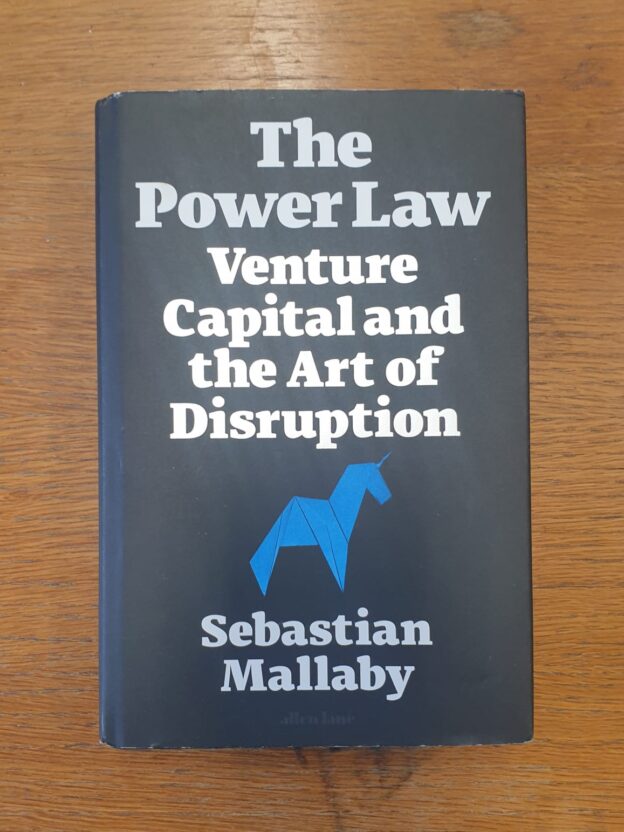

The Power Law in Venture Capital: A Deep Dive into Sebastian Mallaby’s Insights

This review of Sebastian Mallaby’s The Power Law explains how venture capitalists operate in a high-risk world, where a small number of winning startups generate returns large enough to outweigh the losses from the majority that fail. Through a blend of storytelling, research, and critical analysis, it highlights the triumphs and pitfalls of the venture capital industry and offers suggestions for a more ethical and inclusive future. If you’re intrigued by the intersection of finance, tech innovation, and global economics, this article is for you.

Cracking Government and SME Markets: Customer Acquisition Insights from Adie Holt

In his session for the Cyber Runway: Scale accelerator, Adie Holt explored the complexities of working with government and SME clients, offering practical advice for navigating long sales cycles, building strategic relationships, and overcoming procurement challenges.

Navigating the Defence and National Security Sector: Lessons from James Gayner MBE

James Gayner MBE provides a comprehensive overview of how startups can engage with the defence and national security sectors. Learn how to navigate the complex procurement landscape, build relationships with prime contractors, and overcome the hurdles faced by SMEs in this challenging but rewarding space.

Mastering Product and Commercial Fit: A Guide for Startups with Nick Gardner

Nick Gardner’s workshop in the Cyber Runway: Scale program explored the elusive concept of product-market fit (PMF) and how startups can practically identify and maintain it. Learn how to target the right customer segments and adjust your market strategy for sustainable growth.

How to Identify the Moment You’ve Achieved Product-Market Fit: Insights from Dave Palmer

This article delves into Dave Palmer’s actionable advice on how startups can know when they’ve truly achieved product-market fit (PMF). Discover the key moments that signal PMF and learn how to overcome the inevitable challenges along the journey.

A Lucky Tombola Win and the Start of My Midlands Bonsai Society Journey

About 15 years ago, I won a large jade bonsai in a raffle during a visit to the Birmingham Botanical Gardens with my sons. Over time, caring for the bonsai became more of a duty than a passion, but I recently joined the Midlands Bonsai Society (MBS) for guidance on how to look after it properly. With help from members, I learned about techniques such as back budding and successfully repotted the bonsai. My initial journey with bonsai care has been rewarding, and I look forward to continuing it with the support of the MBS.

MapReduce: A 20-Year Retrospective on How Jeffrey Dean and Sanjay Ghemawat Revolutionised Data Processing

This article provides a retrospective on the 20th anniversary of Jeffrey Dean and Sanjay Ghemawat’s seminal paper, “MapReduce: Simplified Data Processing on Large Clusters”. It explores the paper’s lasting impact on data processing, its influence on the development of big data technologies like Hadoop, and its broader implications for industries ranging from digital advertising to healthcare. The article also looks ahead to future trends in data processing, including stream processing and AI, emphasising how MapReduce’s principles will continue to shape the future of distributed computing.

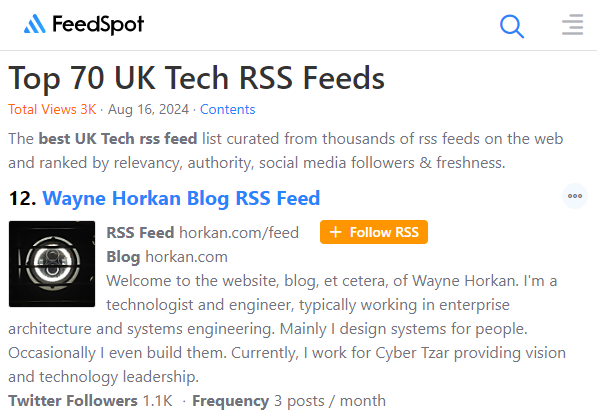

Celebrating a Milestone: Horkan.com Reaches 12th on Feedspot’s UK Tech Blog List

I’m pleased to share that Horkan.com has been recognised as the 12th most widely read tech blog from the UK by Feedspot, based on RSS feed aggregation in their recent article “Top 70 UK Tech RSS Feeds”. This acknowledgement is a significant moment for me, reflecting a journey that began over 17 years ago.

Revisiting the Cloud Security Initiative: Reflections on the Journey

In 2009, I spearheaded an initiative to establish a cloud security working group to address the sovereignty and security of UK data in the cloud. The goal was a cross-sector collaboration between public and private entities, ensuring that the UK kept control of its critical data as cloud computing became a pivotal part of the national infrastructure.
